Last year, graphics processing chip manufacturer NVIDIA released a new AI platform for video calls. The AI platform, Maxine, offers several intriguing features, including the game-changing ability to make it look like you’re paying attention when you’re not. Now, NVIDIA scientists have come up with another video-conferencing AI: one that’ll let people use what are essentially deepfake versions of themselves for calls.

In the video below, YouTuber and software engineer Károly Zsolnai-Fehér describes NVIDIA’s recent R&D endeavor. The company’s obviously building out Maxine, and looking for ways to make video conferencing better in general.

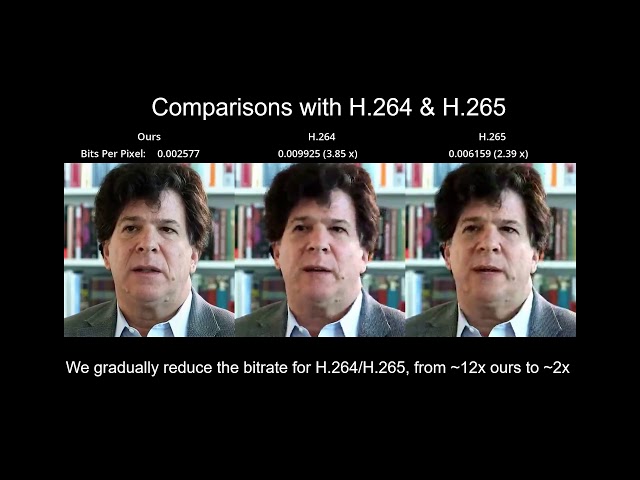

The purpose of this particular AI is to lessen the amount of information that needs to be transmitted through the network in order to have clear video calls. In other words, this AI compresses the data needed to talk via a program like Zoom or Skype far more efficiently. But the way it achieves that efficiency is downright creepy.

As Zsolnai-Fehér explains, the AI works by taking the first image it sees of a caller’s face, then throwing out any subsequent video. Then, instead of transmitting a video of the caller to another person, it takes that image, develops a simulated version of the caller, and transmits that version to the other person. The AI literally makes a complete, and (mostly) indistinguishable, doppelgänger.

To make the digital double, all the AI needs is two pieces of information: how someone’s head moves over time, and how their expressions change. Then it’s off to the races. Zsolnai-Fehér notes that the AI can even rotate callers’ heads and fix framing issues. Which, again, sounds like a way to make it seem like someone’s paying attention when they’re not.
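For a rough sense of why those two pieces of information are so cheap to send, here is a minimal Python sketch of what a per-frame update might look like. To be clear, this is not NVIDIA's format: the `MotionPacket` name, the field layout, and the choice of ten expression keypoints are all assumptions made up for illustration.

```python
import struct
from dataclasses import dataclass
from typing import List, Tuple

# Hypothetical value: the article does not say how many keypoints are tracked.
NUM_KEYPOINTS = 10

Vec3 = Tuple[float, float, float]


@dataclass
class MotionPacket:
    """Illustrative per-frame update: head movement plus expression changes."""

    head_rotation: Vec3            # yaw, pitch, roll
    head_translation: Vec3         # x, y, z
    expression_offsets: List[Vec3]  # one small offset per tracked keypoint

    def to_bytes(self) -> bytes:
        # Flatten everything into a list of 32-bit little-endian floats.
        values = [*self.head_rotation, *self.head_translation]
        for offset in self.expression_offsets:
            values.extend(offset)
        return struct.pack(f"<{len(values)}f", *values)

    @classmethod
    def from_bytes(cls, payload: bytes) -> "MotionPacket":
        # Each float occupies 4 bytes; the first 6 floats are the head pose.
        count = len(payload) // 4
        values = struct.unpack(f"<{count}f", payload)
        rest = values[6:]
        offsets = [tuple(rest[i:i + 3]) for i in range(0, len(rest), 3)]
        return cls(tuple(values[0:3]), tuple(values[3:6]), offsets)


def packet_size_bytes(num_keypoints: int = NUM_KEYPOINTS) -> int:
    """Bytes per update: 6 head-pose floats plus 3 floats per keypoint."""
    return 4 * (6 + 3 * num_keypoints)
```

Under these made-up assumptions, each update is 144 bytes, and the only full image that ever crosses the network is the first one. The receiver would feed each decoded packet, along with that first image, into whatever model generates the simulated face.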

It’s not clear if NVIDIA will deploy this AI to Maxine at some point, but we can’t imagine they won’t. The AI’s developers say it’s ten times more efficient than current video-calling methods. It also allows for “videos” of people to remain crystal clear when they’d otherwise become choppy due to latency. Which sounds like it’d be mostly a good thing. (Although sometimes glitchy video does allow for critical drink sips and positional adjustments.)