Anybody who owns a dog knows that their dog has the cutest smile in the world. (Somehow every dog in the world has the cutest smile in the world; it’s just a law of the universe.) That’s why it’s so very important for dog owners to take a couple of minutes to try NVIDIA’s new AI tool, which lets people slap a dog’s smile onto a seemingly endless array of animals. Fair warning, though: some of the mash-ups are anything but cute.

GANimal is built on a generative adversarial network, or GAN: a type of machine learning system that pits one neural network against another to achieve a desired result, which, in this case, is a mash-up of a dog’s smile and some random animal’s face. In essence, one network, the generative network (or generator), produces new images, while a second network, the discriminative network (or discriminator), tries to tell the generator’s output apart from real photos. The discriminator isn’t handed a checklist of what counts as a valid image; it learns what real images look like from examples during training. As the discriminator gets better at spotting fakes, the generator is pushed to make images realistic enough to fool it.
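The tug-of-war between the two networks is usually written as a pair of loss functions. The sketch below illustrates the classic GAN losses from the original GAN formulation. It is not GANimal’s actual training objective, which is more involved. It assumes each discriminator verdict is already a probability between 0 and 1 that an image is real, and the function names are hypothetical.

```python
import math

EPS = 1e-12  # clamp so log() never receives exactly 0


def _safe_log(p: float) -> float:
    """Natural log of a probability, clamped to the range [EPS, 1]."""
    return math.log(min(max(p, EPS), 1.0))


def discriminator_loss(d_real: list[float], d_fake: list[float]) -> float:
    """Binary cross-entropy loss for the discriminator.

    d_real: the discriminator's probabilities that real photos are real.
    d_fake: its probabilities that generated images are real.
    The loss is low when d_real is near 1 and d_fake is near 0,
    i.e. when the discriminator correctly tells real from fake.
    """
    real_term = -sum(_safe_log(p) for p in d_real) / len(d_real)
    fake_term = -sum(_safe_log(1.0 - p) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake: list[float]) -> float:
    """Non-saturating generator loss: low when the discriminator
    rates the generated images as real (d_fake near 1)."""
    return -sum(_safe_log(p) for p in d_fake) / len(d_fake)


if __name__ == "__main__":
    # Generated images the discriminator mostly believes are real...
    print(generator_loss([0.9, 0.8]))
    # ...versus ones it easily catches as fakes (a much higher loss).
    print(generator_loss([0.1, 0.2]))
```

Training alternates between the two: update the discriminator to lower `discriminator_loss`, then update the generator to lower `generator_loss`. Each network’s improvement raises the bar for the other.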

Just announced on the @NVIDIA #AI Playground, GANimal, a generative adversarial network that allows you to project your pet’s characteristics onto everything from a Lynx to a Saint Bernard. Try it out for yourself below and read more about it here: https://t.co/Hj9hi7gnqJ

— NVIDIA AI Developer (@NVIDIAAIDev) October 28, 2019

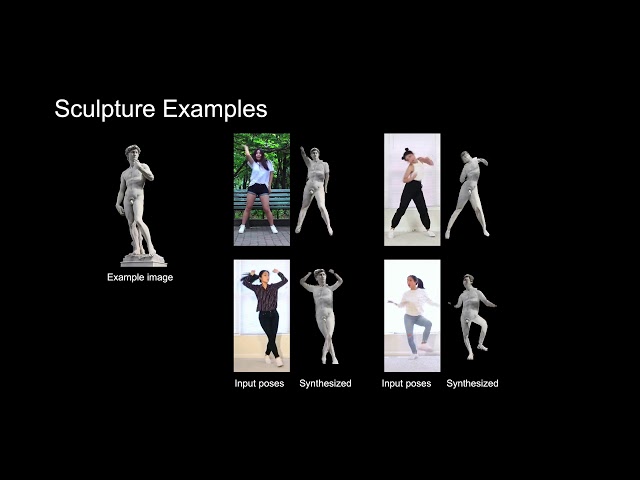

GANimal is worth noting because it needs far fewer images of a new animal to produce convincing results. Many other image-to-image translation GANs need large data sets of every animal they translate to before their output can pass the discriminative network’s test. GANimal is still trained on a large collection of animal photos, but it can translate to an animal it has never seen using just a few example images of that animal, and the demo needs only a single photo of your pet. That is why the researchers behind GANimal call the framework that powers it FUNIT, short for “Few-shot, UNsupervised Image-to-image Translation.”

“In this case, we train a [neural] network to jointly solve many translation tasks where each task is about translating a random source animal to a random target animal by leveraging a few example images of the target animal,” Ming-Yu Liu, an NVIDIA computer-vision researcher behind GANimal, is quoted as saying in an NVIDIA blog post outlining how the machine learning system works. He adds that “Through practicing solving different translation tasks, eventually the network learns to generalize to translate known animals to previously unseen animals.”

In other words, by practicing many translations between animals it has already seen, say, from a dog to a zebra, GANimal picks up general visual patterns, such as the ones that make up a dog’s smile, that carry over to animals it has never seen before. That lets the generative network produce images of a new animal that are convincing enough to fool the discriminative network from only a handful of examples.
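Liu describes training as a long series of small tasks, each of which translates a random source animal into a random target animal using a few example images of the target. A toy sampler for such tasks might look like the following. It is a hypothetical sketch, not NVIDIA’s FUNIT code: the function name, the dictionary of image file names, and the held-out `"saint_bernard"` class are assumptions for illustration, and the sampler only chooses which images go into a task; it does not run any neural network.

```python
import random


def sample_translation_task(
    images_by_animal: dict[str, list[str]],
    training_animals: list[str],
    k: int,
    rng: random.Random,
) -> tuple[str, str, str, list[str]]:
    """Draw one toy translation task (hypothetical helper).

    Picks a random source animal and a different random target animal
    from training_animals, one source image whose pose/expression should
    be kept, and k distinct example images showing the target animal.
    """
    source, target = rng.sample(training_animals, 2)  # two distinct animals
    content_image = rng.choice(images_by_animal[source])
    target_examples = rng.sample(images_by_animal[target], k)
    return source, target, content_image, target_examples


if __name__ == "__main__":
    # Hypothetical file names; a real data set would hold far more images.
    images = {
        "dog": ["dog_01.jpg", "dog_02.jpg", "dog_03.jpg"],
        "zebra": ["zebra_01.jpg", "zebra_02.jpg"],
        "lynx": ["lynx_01.jpg", "lynx_02.jpg", "lynx_03.jpg"],
        "saint_bernard": ["sb_01.jpg", "sb_02.jpg"],
    }
    seen = ["dog", "zebra", "lynx"]  # "saint_bernard" is held out as unseen
    rng = random.Random(0)
    for _ in range(3):
        print(sample_translation_task(images, seen, k=2, rng=rng))
```

In this toy setup, training tasks are drawn only from the seen animals. At test time the same kind of task would be posed with a held-out target such as `"saint_bernard"`, which is where the few example images matter.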

The NVIDIA blog post notes that Liu’s ultimate goal is to make AI that works the way human imagination does: people don’t need thousands or millions of images to identify visual patterns, and soon, with machine learning breakthroughs like FUNIT, AI won’t need that many either. Liu et al.’s FUNIT research paper is being presented at this year’s International Conference on Computer Vision (ICCV) in Seoul.

What do you think of GANimal? What kinds of weird, cute, or downright frightening images did it generate from your pup’s smile? Show us your results in the comments!

Images: NVIDIA