In his 1942 short story “Runaround,” legendary science fiction author Isaac Asimov clearly outlined his “Three Laws of Robotics,” which could, at least in fictional worlds, fundamentally underpin autonomous robots’ behavior. The last of the three laws states, in part, that “A robot must protect its own existence…” This may turn out to be quite necessary for the development of real-life autonomous robots as well, especially when it comes to their ability to feel. At least that’s the argument made in a recently published paper on the significance of self-preservation in robots.

The paper, which we came across via Futurism, was recently published in the journal Nature Machine Intelligence by Antonio Damasio and Kingson Man of the University of Southern California’s Brain and Creativity Institute (BCI). Damasio is one of the institute’s directors, and Man is one of its research scientists. In the paper, which is free to read in full, Damasio and Man argue that “feeling machines” will have to be programmed with the task of maintaining homeostasis. So if engineers want to build machines that feel, and that empathize with what people feel, those machines are going to have to care about their own health.

we wrote a thing. hope you like it.

How do we design machines with something akin to feeling? (1/n)

“Homeostasis and soft robotics in the design of feeling machines” Man & Damasio 2019 Nature Machine Intelligence. https://t.co/fhM4ZbuP49

Free read link: https://t.co/VuManltFCJ pic.twitter.com/HDczSw0FeH— Kingson Man (@therealkingson) October 9, 2019

The paper is about seven pages long and lays out, step by step, the reasoning behind its conclusion: machines need to worry about their own state of “mind” and “body” in order to interact effectively with us meat bags (a term borrowed from the greatest fictional robot of all time). In essence, Damasio and Man are saying that just as biological life needs to be concerned with its own well-being to have meaningful interactions with its environment, so too will genuinely autonomous robots.

That is to say, caring about one’s own self (one’s own temperature, level of hunger, amount of sleep, etc.) is a critical part of what gives life any meaning at all. The researchers make the same point about robots when they write, “This elementary concern would infuse meaning into [a machine’s] particular information processing.”

This intuitively makes sense because maintaining homeostasis is a significant impetus for—perhaps the only impetus for—biological organisms having any goals whatsoever. If you’re cold, your goal is to find some way to get warm; if you’re hungry, your goal is to find food; if you’re tired, your goal is to find somewhere to sleep; if you’re bored, your goal is to use any one of the bazillion streaming services currently available until you’re hungry or sleepy, at which point you circle back to goal two or three. (We’re kidding to some extent with that last one, of course, but you get the point.)

The paper describes the concept succinctly when it notes that “machines capable of implementing a process resembling homeostasis might… acquire a source of motivation and a new means to evaluate behaviour, akin to that of feelings in living organisms.”
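For the technically curious, here is a toy Python sketch of that idea. It is our own illustration, not code from Damasio and Man’s paper. The internal variables, setpoints, actions and the “drive” formula (squared distance from the setpoints, in the spirit of drive-reduction accounts of homeostatic motivation) are all assumptions made up for the example. The agent scores each action by how much it is predicted to reduce its drive. That is one simple way to read “a new means to evaluate behaviour.” It then picks the best-scoring action.

```python
# Toy homeostatic agent. Everything here is a hypothetical illustration,
# not the method from Man & Damasio (2019).

# Assumed setpoints for the agent's internal variables.
SETPOINTS = {"temperature": 37.0, "energy": 1.0, "rest": 1.0}

# Assumed actions and their effect on each internal variable.
ACTIONS = {
    "seek_warmth": {"temperature": +2.0},
    "eat": {"energy": +0.5},
    "sleep": {"rest": +0.5},
    "idle": {},
}


def drive(state: dict[str, float]) -> float:
    """Homeostatic drive: squared distance of the state from its setpoints."""
    return sum((state[k] - SETPOINTS[k]) ** 2 for k in SETPOINTS)


def apply_action(state: dict[str, float], action: str) -> dict[str, float]:
    """Return the predicted next state; the input state is left unchanged."""
    next_state = dict(state)
    for k, delta in ACTIONS[action].items():
        next_state[k] += delta
    return next_state


def reward(state: dict[str, float], action: str) -> float:
    """Drive reduction: positive if the action moves the agent toward its setpoints."""
    return drive(state) - drive(apply_action(state, action))


def choose_action(state: dict[str, float]) -> str:
    """Pick the action with the largest predicted drive reduction."""
    return max(ACTIONS, key=lambda a: reward(state, a))


if __name__ == "__main__":
    cold = {"temperature": 34.0, "energy": 1.0, "rest": 1.0}
    print(choose_action(cold))  # prints "seek_warmth"
```

One design choice worth flagging: because behaviour is scored by drive reduction, an agent that is already close to its setpoints gains nothing from “seeking warmth” and simply idles. Its goals come from its own internal state rather than from outside commands.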

The natural force of homeostasis, present in the simplest organisms, carried over into organisms with nervous systems, was expressed as feelings, and motivated creative reason. It has remained pervasive in cultures. #TheStrangeOrderofThings

— Antonio Damasio (@damasiousc) March 6, 2018

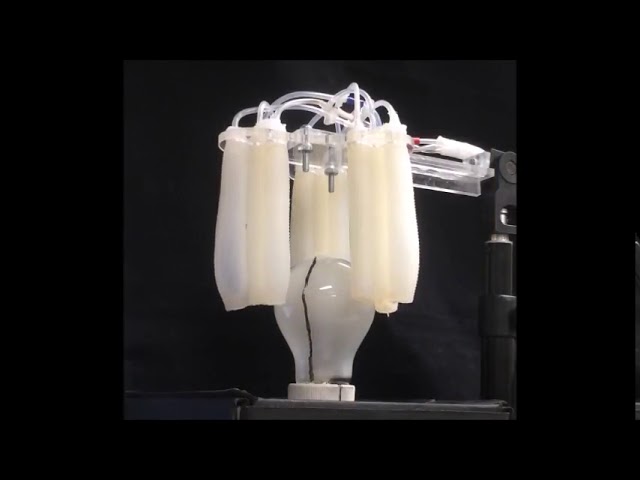

As for how robots could develop their own intuitive sense of homeostasis, Damasio and Man write that the answer lies in sensors that work, in a very real sense, just like our own biological ones (eyeballs, ears, taste buds, etc.). They single out human skin as an example of a biological sensor, or more precisely an amalgam of biological sensors, that could be replicated in robots by using “soft robotic” materials. They note that researchers have already developed “a soft electronic ‘skin’” made with an “elastomer base with droplets of liquid metal that, on rupture, cause changes in electrical conductivity across the damaged surface,” which could be deployed on robots as one way of monitoring homeostasis.

But even if a robot covered in “skin” that senses tears or leaks can develop a sense of homeostasis, and thus some kind of “feeling,” that development seems to lead inevitably to what can only be described as vulnerability. That is exactly what Damasio and Man are aiming for, however. They appear to believe that vulnerability necessarily goes hand-in-hand with sensing homeostasis, which would mean that, for robots and people alike, vulnerability goes hand-in-hand with having feelings. “Rather than up-armouring or adding raw processing power to achieve resilience,” the authors write, “we begin the design of these [feeling] robots by, paradoxically, introducing vulnerability.”

A video demonstrating a soft robotic sensor skin developed by researchers at UC San Diego.

“Homeostatic robots might reap behavioural benefits by acting as if they have feeling,” Damasio and Man write in their conclusion, adding that “Even if they would never achieve full-blown inner experience in the human sense, their properly motivated behaviour would result in expanded intelligence and better-behaved autonomy.” And even if robots never understand why something feels good, or whether they experience a feeling of “goodness” at all, creating better-behaved autonomous robots seems like a worthwhile goal: it could help preserve the homeostasis of our entire species, after all.

What do you think of Damasio and Man’s paper on “feeling machines”? Do you think robots need to have a sense of homeostasis in order to develop empathy, or do they just need to do what they’re told? Give us your thoughts in the comments if that action will help you maintain homeostasis!

Feature image: Dick Thomas Johnson