Doron Adler and Justin Pinkney, two software engineers, recently released a “Toonification translation” AI model that turns real faces into remarkably polished cartoon representations. And while the toonification tool, “Toonify,” was originally available to the public, it became too popular to sustain cheaply. But some people managed to Toonify a ton of celebrities before the tool was pulled, and the results are stellar.

After much training of neural networks @Norod78 and I have put together a website where anyone can #toonify themselves using deep learning! https://t.co/OQ23p30isC

— Justin Pinkney (@Buntworthy) September 16, 2020

In a series of blog posts, which come via Gizmodo, Pinkney outlines how he and Adler created Toonify. Pinkney, who’s based in the U.K., says he began the process using a generative adversarial network (or GAN) called StyleGAN2. A GAN is a type of machine learning system that pits two neural networks against each other: a generator that produces images, and a discriminator that tries to tell those images apart from real ones. In this case, that contest selects for the best Toonified faces.
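For readers who want to see what “pitting one network against another” looks like in numbers, here is a minimal sketch of the textbook GAN loss functions. It is not Toonify’s actual training code, and the score lists are made-up values standing in for a discriminator’s output (its estimated probability that an image is real).

```python
import math


def discriminator_loss(real_scores, fake_scores):
    """Cross-entropy loss: low when real images score near 1 and fakes near 0."""
    real_term = sum(-math.log(s) for s in real_scores) / len(real_scores)
    fake_term = sum(-math.log(1.0 - s) for s in fake_scores) / len(fake_scores)
    return real_term + fake_term


def generator_loss(fake_scores):
    """Generator loss: low when the discriminator scores fakes near 1."""
    return sum(-math.log(s) for s in fake_scores) / len(fake_scores)


# Hypothetical discriminator outputs, each strictly between 0 and 1.
real_scores = [0.9, 0.8, 0.95]
weak_fakes = [0.1, 0.2, 0.05]      # fakes the discriminator easily rejects
strong_fakes = [0.6, 0.7, 0.55]    # fakes that mostly fool the discriminator

print("generator loss, weak fakes:  ", generator_loss(weak_fakes))
print("generator loss, strong fakes:", generator_loss(strong_fakes))
print("discriminator loss vs weak:  ", discriminator_loss(real_scores, weak_fakes))
print("discriminator loss vs strong:", discriminator_loss(real_scores, strong_fakes))
```

The two losses pull in opposite directions: more convincing fakes lower the generator’s loss and raise the discriminator’s, which is the tug-of-war that drives training.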


Using StyleGAN2, which is able to create stunningly realistic faces (each one of the faces on thispersondoesnotexist.com, for example, is completely fake), Adler was able to create a modified GAN that somewhat Toonifies a face. He did so by further training StyleGAN2 on roughly 300 images of characters from animated films.

Blending humans and cartoons using @Buntworthy's Google Colab notebook. Thank you for that, it's awesome. Here is a YouTube version of this video: https://t.co/7bUd7nXaX3 pic.twitter.com/iG09lpEAXX

— Doron Adler (@Norod78) August 23, 2020

But there was still a hiccup in the Toonification process. The problem was with the dataset and the fact that it contained characters from different types of animated films. The list included everything from Moana to The Little Mermaid to the original Snow White and the Seven Dwarfs. And that meant very different animation styles.


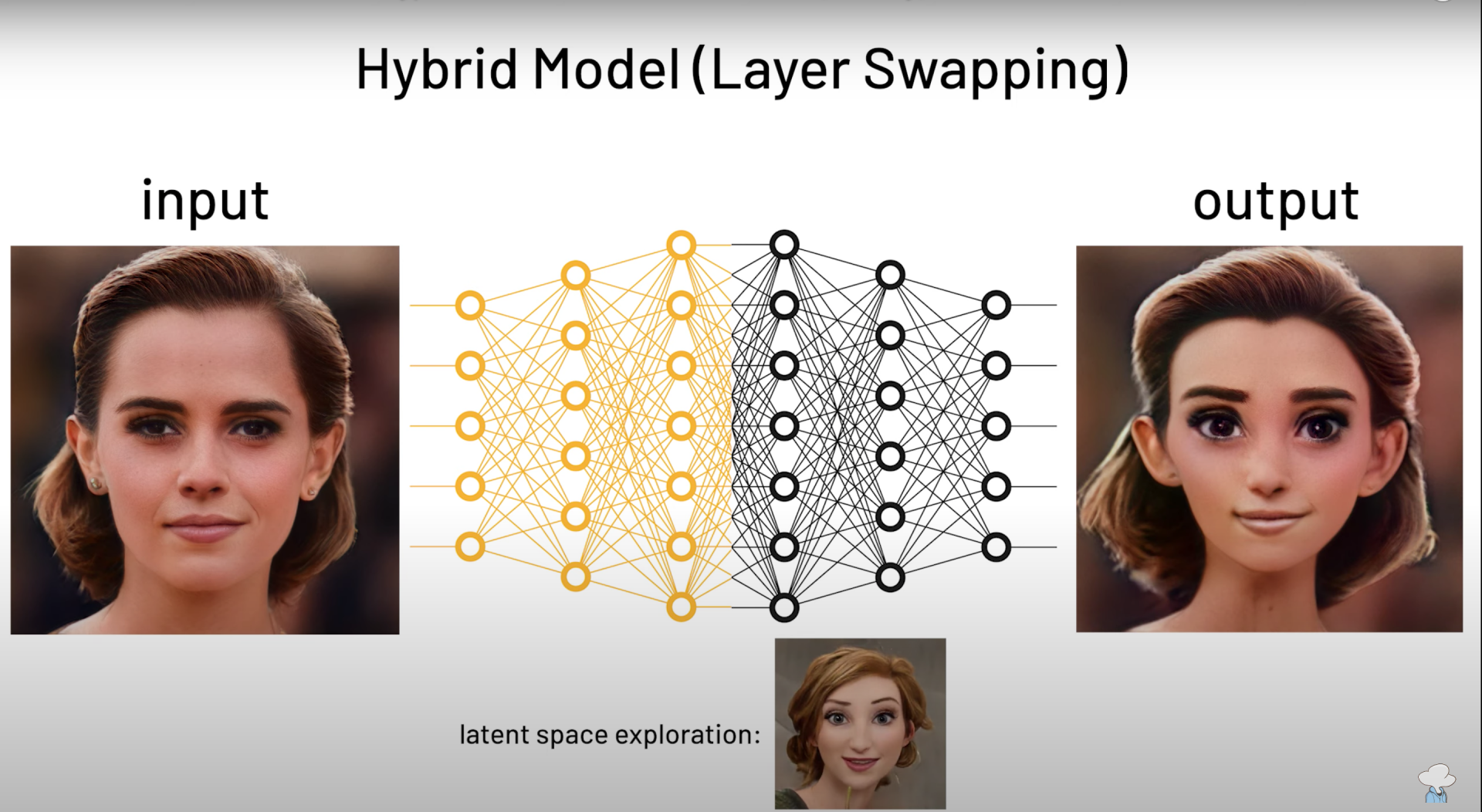

To address this issue, the software engineers say they swapped layers between the original face model and the cartoon-trained one to affect the Toonified faces in different ways. The “layers” referred to in this context are successive stages of the neural network that “learn” about different aspects of the data and, subsequently, are able to spot patterns and generate images accordingly.


More specifically, the software engineers say that in Toonify, lower-resolution layers affect the pose of the head and shape of the face, while higher-resolution ones are responsible for things like texture and lighting. For a fuller description of the process, YouTube user bycloud gives a great, short explainer in a video on the subject.
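The layer-swapping idea can be sketched in a few lines. This is an illustration only, not the engineers’ code: each “model” here is a plain dictionary mapping a layer’s output resolution to a placeholder for its weights, and the resolution list, the `swap_layers` helper, and the cutoff of 32 are all assumptions made for the example.

```python
def swap_layers(base_model, finetuned_model, swap_resolution, low_res_from="finetuned"):
    """Build a blended model by taking layers from two models based on resolution.

    Layers at or below `swap_resolution` come from the model named by
    `low_res_from`; all higher-resolution layers come from the other model.
    """
    if low_res_from not in ("finetuned", "base"):
        raise ValueError("low_res_from must be 'finetuned' or 'base'")
    if base_model.keys() != finetuned_model.keys():
        raise ValueError("both models must have the same set of layers")

    blended = {}
    for resolution in sorted(base_model):
        is_low = resolution <= swap_resolution
        # Take the fine-tuned layer when this layer falls on the side
        # assigned to the fine-tuned model.
        take_finetuned = is_low == (low_res_from == "finetuned")
        source = finetuned_model if take_finetuned else base_model
        blended[resolution] = source[resolution]
    return blended


# Assumed layer resolutions for a 1024x1024 generator; weights are stand-in strings.
RESOLUTIONS = [4, 8, 16, 32, 64, 128, 256, 512, 1024]
face_model = {r: f"face-weights-{r}" for r in RESOLUTIONS}
toon_model = {r: f"toon-weights-{r}" for r in RESOLUTIONS}

# Cartoon structure (pose, face shape) from the toon model,
# realistic texture and lighting from the original face model.
blended = swap_layers(face_model, toon_model, swap_resolution=32)
for resolution, weights in blended.items():
    print(resolution, weights)
```

Flipping `low_res_from` to `"base"` gives the opposite blend: a realistic face shape rendered with cartoon texture and lighting. Moving `swap_resolution` up or down shifts how much of each model ends up in the result.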

Moving forward, Adler and Pinkney say they’re working on a way to deliver Toonify to the masses again. It seems they’re leaning away from having ads, and are still seeking a financial solution. Once they solve that problem, however, there’s no doubt cartoon profile photos will flow like the spice.

Feature image: bycloud