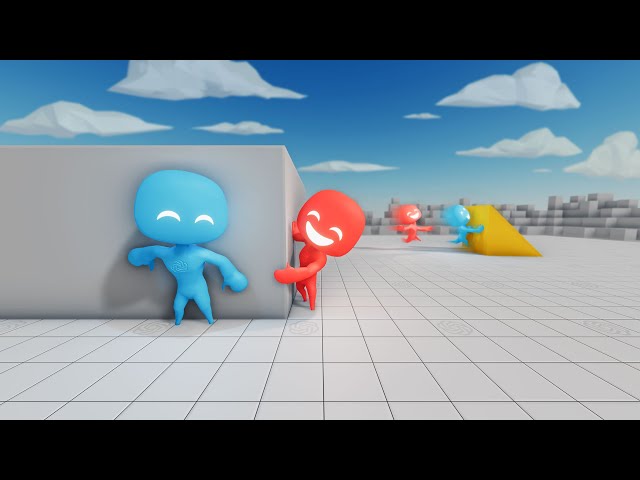

Okay everybody, time to gather around again and see what kind of madness has been conjured by OpenAI, the multi-billion-dollar non-profit that keeps cranking out one ridiculous AI breakthrough after another. Most recently, the company released the very human-sounding GPT-2 language model, and now it has dropped a new video of virtual agents learning to play hide-and-seek. And while the agents are super cute, the implications of their ability to learn in changing environments are Macaulay Culkin Home Alone face to the max.

In this virtual hide-and-seek clip (which comes via Digg), OpenAI shows off agents learning how to play the simple children's game from scratch. We watch as the agents, which are essentially software bots whose "goal" is to win the game, become so proficient that they even invent ways of using the virtual environment that their programmers didn't think were possible.

The most astounding aspect of this demonstration is OpenAI's claim that it could be a proof of concept for the idea that this kind of gameplay can, more or less, be used to evolve virtual agents that are "truly complex and intelligent." The video notes that the agents are already programmed with a kind of animal-like intelligence, in that they keep trying to figure out which set of actions they need to take in varying environments in order to earn a desired reward.


Another crucial point here is that these agents are learning without supervision. This means that none of the strategies the agents use to hide from and seek each other are programmed into them; every action the little blue and red team players are performing is self-taught, and stems necessarily from their core purpose (or utility function) of winning the game.

All of this becomes even crazier when you consider that OpenAI is also working on machines that can intelligently manipulate the real world, as evidenced in the "Dactyl" robo-hand video below.

If OpenAI can combine the learning capabilities of its virtual agents (once they've been trained in far more complex environments) with its dexterous robotic-hand prototypes, it seems like a short leap to imagine a very fast-learning robot that can manipulate the real world. A very fast-learning robot that can also track you down no matter where you hide.

What do you think of these virtual hide-and-seek agents? Are they a great proof-of-concept for more complex virtual intelligence, or just some bots that are very good at learning how to play a specific game? Let us know in the comments!

Images: OpenAI