Prepare your tinfoil hats, everyone, ’cause a company in Russia is developing an AI-powered mind-reading device that can assemble an image of what a person is looking at solely based on their brain waves—kind of. The technology is obviously nascent, which means it’s mostly just identifying what category of thing a person is looking at, but its creators say that, in time, it could be effective enough to give Elon Musk’s surgically implanted Neuralink a run for its money.

Futurism reported on the mind-reading device, which is the brainchild (puns sustain us as humans) of the Russian corporation Neurobotics and the Moscow Institute of Physics and Technology (MIPT). Researchers behind the device posted their findings on the preprint server bioRxiv, highlighting the fact that they want to use the technology to improve stroke victims’ ability to control rehabilitation devices with their minds.

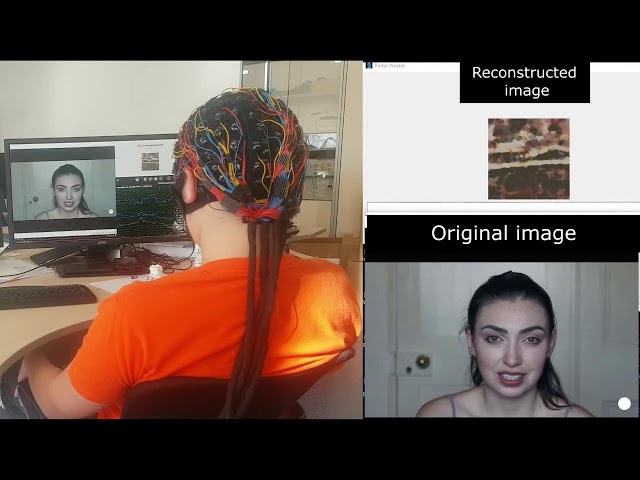

An MIPT press release notes that the device works by using neural networks that decode the images people are looking at from electroencephalography (EEG) measurements recorded by an electrode-covered cap. An EEG measures the electrical activity in the brain using electrodes, in part by picking up neural oscillations, which are commonly referred to as “brain waves.”

The devil, as always, is in the details, however, and it doesn’t seem that the device simply recreates a digital image of whatever somebody is looking at. Instead, it seems that two neural networks work together to decide what category of thing a person is looking at. One neural net was trained to turn the brain waves people produced while looking at a certain category of image into “noise,” and another was trained to take that noise and turn it into intelligible images.

A background video on Neurobotics (featuring mind-controlled drone).

But the neural net that takes the brain wave measurements from the EEG and turns them into noise for the next neural net is low resolution, and is only able to determine what category of thing somebody is looking at. (Categories in this trial included shapes, human faces, moving mechanisms, motor sports, and waterfalls, although the researchers noted in their paper that they want to expand the number of categories.) The second neural net, it seems, then takes that category into consideration when turning the noise into a proper, viewable image.
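For the code-curious, here is a minimal toy sketch of that two-stage idea, and nothing more. It is not the researchers’ actual architecture: the layer sizes, the random untrained weights, the separate `classify` step, and every function name below are assumptions made up for illustration. It only shows the data flow described above, in which EEG features become a “noise” vector, a category is picked from that vector, and a second stage turns the noise plus the category into a small grid of pixel values.

```python
import random

# The five categories named in the trial.
CATEGORIES = ["shapes", "human faces", "moving mechanisms", "motor sports", "waterfalls"]

# Hypothetical sizes, chosen only to keep the toy small.
N_FEATURES, N_LATENT, SIDE = 16, 8, 4


def make_matrix(rows, cols, rng):
    """Random, untrained weights standing in for a trained network."""
    return [[rng.uniform(-1.0, 1.0) for _ in range(cols)] for _ in range(rows)]


def matvec(matrix, vector):
    """Plain matrix-vector product."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def encode(eeg_features, enc_weights):
    """Stage 1: map EEG features to a latent 'noise' vector."""
    return matvec(enc_weights, eeg_features)


def classify(latent, cls_weights):
    """Pick the highest-scoring category index from the latent vector."""
    scores = matvec(cls_weights, latent)
    return max(range(len(scores)), key=scores.__getitem__)


def decode(latent, category_index, dec_weights, side):
    """Stage 2: turn noise plus a one-hot category into a side x side grid."""
    one_hot = [1.0 if i == category_index else 0.0 for i in range(len(CATEGORIES))]
    pixels = matvec(dec_weights, latent + one_hot)
    return [pixels[r * side:(r + 1) * side] for r in range(side)]


rng = random.Random(0)
enc_w = make_matrix(N_LATENT, N_FEATURES, rng)
cls_w = make_matrix(len(CATEGORIES), N_LATENT, rng)
dec_w = make_matrix(SIDE * SIDE, N_LATENT + len(CATEGORIES), rng)

# Fake EEG features; a real system would derive these from electrode recordings.
eeg = [rng.gauss(0.0, 1.0) for _ in range(N_FEATURES)]

latent = encode(eeg, enc_w)
category = classify(latent, cls_w)
image = decode(latent, category, dec_w, SIDE)

print("guessed category:", CATEGORIES[category])
print("image size:", len(image), "x", len(image[0]))
```

Because the weights are random, the guessed category and the “image” are meaningless here. The point is only that the second stage never has to invent an image from scratch: it always starts with one of five known categories.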

It seems fair to describe this method of mind reading as bowling with bumpers: yes, the neural networks are reconstructing images of whatever a person is looking at in real time, but they already know the images are going to fall into one of a limited number of categories, which themselves give the neural nets a lot to start with.

Still, the goal of helping stroke victims with their recovery is obviously honorable, and the odds of this tech improving are presumably sky high. Grigory Rashkov, a researcher at MIPT and a programmer at Neurobotics, is quoted in the MIPT press release as saying that “we can use this [device] as the basis for a brain-computer interface operating in real time,” and that it could be more reasonable than Elon Musk’s neural implant, which faces “the challenges of complex surgery and rapid deterioration due to natural processes.” Regardless of which type of device wins out, everybody should still be gearing up to watch what they think. Or wear one of those tinfoil hats.


Images: NeuroboticsRU